What Are The 4 Risk Levels In Risk Management?

When businesses and investors set financial objectives, they always face the risk of not achieving them. Based on this score, we know that this problem (10% of customers departing) is having a significant effect on the health of the business. Organizations that embrace a proactive mindset position themselves to handle uncertainty more effectively, which ultimately leads to better outcomes and sustained success. Being aware of common pitfalls can also prevent costly mistakes and support a more resilient approach to managing risk.

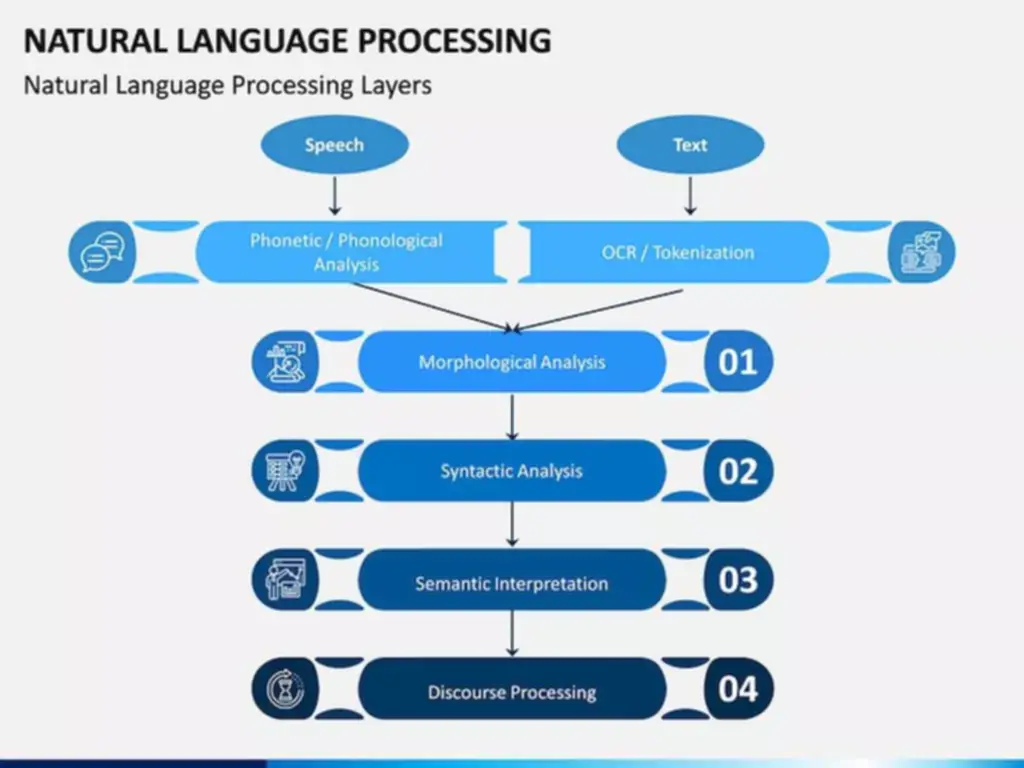

Risk analysis consists of using tools and techniques to determine the likelihood and impact of project risks that have been previously identified. It therefore helps project managers understand the uncertainty around potential risks and how they would affect the project's schedule, quality and costs if they were to occur. Risk analysis isn't exclusive to project management; it's also used in other disciplines such as business administration, construction and manufacturing. Effective risk management involves thorough risk impact assessments and regular risk assessments, enabling project teams to identify risks and evaluate their likelihood and potential impact. By mitigating risk, organizations can improve business continuity and support compliance with regulatory requirements. In the fast-paced and ever-changing landscape of project management, risks are an inevitable part of any undertaking.

Creating A Risk Analysis Framework

This foresight not only protects assets but also fosters a culture of preparedness. Many organizations fall into common pitfalls that can undermine their risk management efforts. A business impact analysis (BIA) identifies the potential effects of disruptions on an organization's operations. After prioritization, developing specific strategies to mitigate or manage these risks is essential.

Why This New Way Of Calculating Critical Risk Is So Important For Your Crisis Readiness

For example, consider assessing risk for driving to Disney World on a family trip. This way, the entire ecosystem, its variables and its conditions are accounted for, giving you the most complete understanding of the risk and its possible impacts. Identifying, assessing and mitigating risks is not a one-time exercise but an ongoing learning process that requires re-evaluating risks as the project (or policy) develops. Strategic risks are those that arise from external factors such as changes in the market, competition or technology. Operational risks, on the other hand, are internal risks that arise from the day-to-day operations of the organization. By identifying risks early, organizations can implement strategies that prevent issues from escalating.

Assessing And Prioritizing Risks

There are several risk analysis methods that are meant to help managers through the analysis and decision-making process. Some of these involve the use of risk analysis tools such as project management charts and documents. Quantitative risk analysis counts the possible outcomes for the project and estimates the probability of still meeting project objectives.
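One common way to count possible outcomes is a Monte Carlo simulation. The sketch below is only an illustration, not the method of any particular tool: the task list, the three-point duration estimates and the function name `chance_of_meeting_deadline` are all assumptions made up for this example.

```python
import random

# Hypothetical tasks: (optimistic, most likely, pessimistic) durations in days.
TASKS = [(3, 5, 10), (8, 10, 15), (2, 4, 9)]


def chance_of_meeting_deadline(tasks, deadline_days, runs=10_000, seed=42):
    """Estimate the probability that the summed task durations fit the deadline.

    Each run samples every task from a triangular distribution and counts
    the run as a success when the total is within the deadline.
    """
    rng = random.Random(seed)  # fixed seed so repeated calls give the same estimate
    on_time = 0
    for _ in range(runs):
        total = sum(rng.triangular(low, high, mode) for low, mode, high in tasks)
        if total <= deadline_days:
            on_time += 1
    return on_time / runs


if __name__ == "__main__":
    for deadline in (18, 22, 26):
        p = chance_of_meeting_deadline(TASKS, deadline)
        print(f"Deadline {deadline} days: about {p:.0%} of simulated outcomes finish on time")
```

The triangular distribution is used here only because it needs nothing more than three estimates per task; a real analysis might choose a different distribution or model dependencies between tasks.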

Technology enhances risk management strategies by automating data collection, improving the accuracy of analysis and enabling real-time monitoring. It empowers teams to identify potential threats quickly, streamline communication and make informed decisions, ultimately reducing overall risk exposure.

Creating a risk register usually involves several reliable information sources such as the project team, subject matter experts and historical data. Effective risk management can also help organizations save costs in the long run. By identifying and addressing potential risks early on, organizations can avoid costly damages and losses that may occur if the risks are left unaddressed. Having a robust risk management plan in place can also help organizations comply with legal and regulatory requirements, which helps avoid legal penalties and reputational damage.

Finally, it helps improve organizational resilience and adaptability in the face of potential risks. Organizations that consistently prioritize risk management are better positioned to navigate challenges and seize opportunities. By integrating a robust risk management framework, businesses can improve resilience and support long-term success. Effective risk management isn't a one-time effort but a continuous process requiring regular evaluation and adaptation. By developing a thorough risk management plan, organizations can identify, assess and prioritize risks systematically.

Our scoring is complete once we select a level of impact (1 to 5) and a level of probability (1 to 5). Whatever the reason, the Strategic Risk Severity Matrix is a useful tool that can help you make a data-driven determination. In this post, I'll walk you through each step of using this tool, along with a practical example to demonstrate how it works. I was recently asked to explain the "Impact Score" in a strategic risk analysis process. Additionally, maintaining communication among team members fosters accountability and encourages proactive problem-solving.
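As a rough illustration of the 1-to-5 scoring, the sketch below multiplies impact by probability and maps the result to four levels. The text above does not say how the two values are combined or where the level boundaries sit, so the multiplication, the thresholds and the level names are all assumptions chosen for this example.

```python
def risk_score(impact: int, probability: int) -> int:
    """Combine impact and probability (each 1 to 5) into a single score.

    Multiplying the two is a common convention, assumed here.
    """
    for name, value in (("impact", impact), ("probability", probability)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return impact * probability


def risk_level(score: int) -> str:
    """Map a score from 1 to 25 onto four levels (thresholds are hypothetical)."""
    if score >= 17:
        return "Very High"
    if score >= 10:
        return "High"
    if score >= 5:
        return "Medium"
    return "Low"


if __name__ == "__main__":
    score = risk_score(impact=5, probability=4)
    print(score, risk_level(score))  # prints: 20 Very High
```

An organization would normally tune the thresholds to its own risk appetite rather than reuse these values.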

Others look to identify risks within a given operation and bring awareness to leadership. Technology has also made it easier for organizations to communicate and collaborate with stakeholders involved in risk management. Cloud-based platforms and mobile applications allow for real-time sharing of information and updates, making it easier for teams to work together and make informed decisions. Implementing a well-structured risk management plan not only safeguards resources but also improves overall operational efficiency. Implementing risk mitigation measures is essential, as these strategies help reduce potential adverse impacts.

- To make the scores easier to understand, you can multiply them by a fixed value (e.g. 100).

- Despite the challenges involved, a proactive approach to risk management can significantly improve an organization's ability to navigate uncertainty and safeguard its objectives against potential threats.

- It's meant to be used as input for the risk management plan, which describes who's responsible for these risks, the risk mitigation strategies and the resources needed.

This is where the risk register comes in, as it is the key to prioritizing your risks. The risk team documents the risk score and reports it to the decision-makers so they can take appropriate action. Given the very high risk rating, the organization decides to implement advanced cybersecurity measures, conduct regular system audits and provide employee training to minimize the likelihood and impact of a cyber attack. This risk register template has everything you need to keep track of the potential risks that might affect your project as well as their likelihood, impact, status and more. To help, we've prepared some free risk analysis templates that will help you through the risk analysis process. Once risks have been identified, assessed and prioritized, they need to be mitigated.
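A risk register can be as simple as a list of entries sorted by score. The following is a minimal sketch: the `RiskEntry` fields mirror the likelihood, impact and status columns mentioned above, while the class name, the sample risks and the probability-times-impact score are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class RiskEntry:
    """One row of a hypothetical risk register."""
    name: str
    probability: int  # 1 (rare) to 5 (almost certain)
    impact: int       # 1 (negligible) to 5 (severe)
    status: str = "open"

    @property
    def score(self) -> int:
        # Probability times impact is an assumed scoring convention.
        return self.probability * self.impact


def prioritize(register):
    """Return the entries ordered from highest to lowest score."""
    return sorted(register, key=lambda entry: entry.score, reverse=True)


if __name__ == "__main__":
    register = [
        RiskEntry("Key supplier delay", probability=3, impact=3),
        RiskEntry("Cyber attack", probability=4, impact=5),
        RiskEntry("Office relocation overrun", probability=2, impact=2, status="monitoring"),
    ]
    for entry in prioritize(register):
        print(f"{entry.score:>2}  {entry.name} ({entry.status})")
```

Sorting by score puts the cyber attack entry at the top, which matches the idea that the highest-rated risks are reported to decision-makers first.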

This involves updating the risk assessments based on new information, changes in the operating environment and the effectiveness of implemented controls. Qualitative risk analysis is the basis for quantitative risk analysis and reduces project uncertainty while focusing on high-impact risks. Get started with qualitative risk analysis with our free risk assessment template. The Delphi technique involves a panel of experts on topics that are critical to your project risk.

Implementing risk mitigation measures ensures that potential issues are addressed proactively, reducing the likelihood of adverse impacts. Objective assessments reduce biases that can skew perceptions of risk, promoting transparency and fostering trust among stakeholders. Furthermore, they provide a solid foundation for strategic planning, enabling organizations to allocate resources effectively. Ultimately, a well-executed business impact analysis strengthens an organization's resilience by enabling proactive management of potential disruptions. It helps organizations understand how different risks can affect their processes and prioritizes recovery efforts accordingly. High-scoring risks demand immediate attention, guiding the development of appropriate mitigation strategies.
